13 Best Elasticsearch Alternatives For Smart Search

Nov 25, 2025

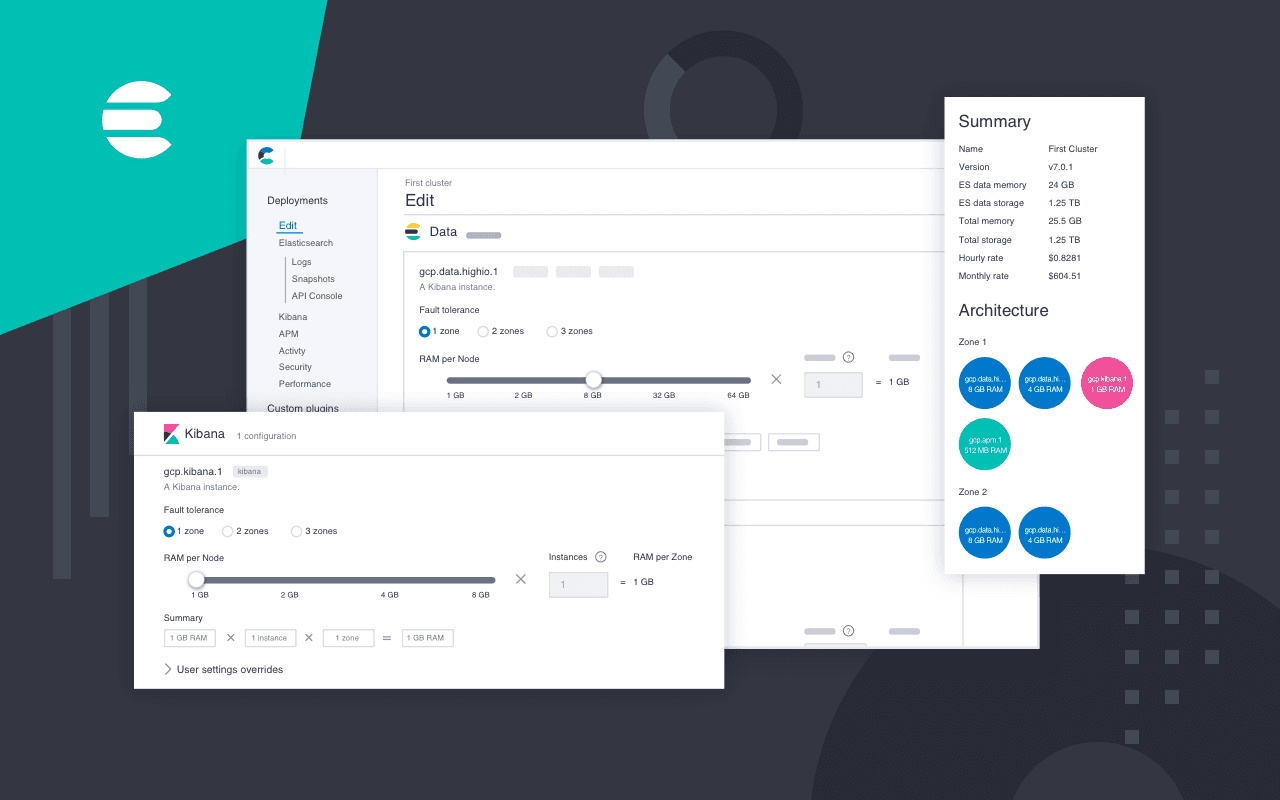

Daniel Dultsin

When your team cannot find the right documents or search results feel off, your knowledge management strategy breaks down and productivity drops. Choosing the right search engine matters for relevance, indexing, scalability, and cost, whether you are weighing OpenSearch, Apache Solr, Algolia, Meilisearch, Typesense, Sphinx, or other full-text and vector search options. This guide lays out clear comparisons of alternatives to Elasticsearch, covering search relevance, semantic search, embeddings, and query performance so you can pick the solution that fits your goals. What trade-offs matter most for your data size, latency needs, and relevance tuning?

Coworker's enterprise AI agents can scan your content, compare how each search option performs across your use cases, and deliver simple, prioritized recommendations to help you gain a clear, well-rounded understanding of the top Elasticsearch alternatives.

Summary

The incumbent search infrastructure still dominates usage, powering over 80% of search queries and handling more than 2 billion queries per day, which encourages teams to default to familiar tooling even as tradeoffs compound.

Hidden operational costs drive migration decisions, with 30% of users reporting they switched because of high ops costs caused by snapshot, replica, and index churn that multiply storage and I/O bills.

Scaling problems become business problems, with 25% of teams citing scaling difficulty as a reason to switch, and migrations showing emergency maintenance can rise to three events per month while engineers spend 10 to 15 hours per week triaging.

Cost pressure is prompting re-evaluation, as over 60% of companies are considering alternatives due to TCO concerns; decisions must therefore be validated with multi-year total cost models rather than node price alone.

Practical evaluations should start with measurable SLAs, notably P99 tail latency, precision, or MRR for decision-driving queries, and human operating cost in hours per week, captured over two weeks of real traffic to avoid optimistic benchmarks.

Vendor and ecosystem risk matter for long-term bets, with market analysis projecting the incumbent's share could shrink by about 15% by 2025, making roadmap and support stability a material factor in platform choice.

This is where Coworker's enterprise AI agents fit in. They address this by scanning content, comparing search options against real queries and metrics, and delivering prioritized recommendations for migration and configuration.

13 Best Elasticsearch Alternatives For Smart Search

I lay out 13 practical Elasticsearch alternatives you can choose from based on scale, latency tolerance, privacy, and whether you need vector or semantic search. Below each entry, I explain where it wins, the tradeoffs you should expect, and the scenarios that make it the more intelligent choice.

Per Algolia Blog, 2025, "Elasticsearch powers over 80% of the world's search queries." Elastic’s ubiquity explains why so many organizations default to it. Still, as traffic patterns diverge and teams demand semantic recall or lower ops overhead, different engines become better fits. And since, per Algolia Blog, 2025, "Elasticsearch handles over 1 billion searches per day," production scale and cost are practical constraints that push teams to evaluate other architectures.

1. Coworker

Coworker redefines enterprise AI by functioning as a genuine work partner rather than just a basic assistant. Powered by its proprietary Organizational Memory (OM1), Coworker excels at understanding, researching, planning, and executing complex workflows across an organization's entire technology stack. This AI agent provides perfect organizational recall, deep context awareness, and multi-step task execution, addressing the unmet needs in traditional search tools that only offer surface-level results or isolated automations.

Key Features

Perfect Organizational Recall: Instantly access company knowledge with semantic search tailored to your role and projects.

Cross-Functional Synthesis: Connects insights across various teams, departments, and time periods for holistic understanding.

Multi-Step Work Execution: Goes beyond search to help plan, automate, and track complex workflows across 25+ enterprise apps.

Relationship Intelligence: Maps connections between people, projects, and problems to streamline collaboration and decision-making.

Enterprise-Grade Security: SOC 2 Type 2 certified protections and respects existing permissions with no elevation required.

Pros

Deep contextual understanding far surpasses keyword-based search tools

Reduces up to 50% of time wasted on information synthesis and repetitive tasks

Rapid deployment within 2-3 days compared to weeks for traditional enterprise search platforms

Supports large organizations scaling from hundreds to thousands of employees seamlessly

Transparent and competitive pricing model aligned with enterprise budgets

Best For

Large enterprises that require unified search and work automation across many tools

Sales and customer success teams that want real-time insights and deal acceleration

Product and engineering departments that need automated documentation and task tracking

Organizations aiming to improve cross-team collaboration and knowledge retention

Technology buyers looking for an enterprise-ready AI with robust security and compliance

2. Meilisearch

Meilisearch is an open-source, ultra-fast search engine that delivers speedy, relevant results with minimal configuration. It excels in delivering results in under 50 milliseconds out of the box, which is significantly faster than Elasticsearch in many scenarios. Meilisearch stands out with features such as automatic typo correction and a hybrid search engine that combines full-text and semantic search. Its low-maintenance design appeals especially to developers and businesses looking for hassle-free search experiences.

Key Features

Typo tolerance that automatically corrects misspellings and improves result relevance

Hybrid search combining keyword and semantic search to enhance accuracy

Built-in faceted filtering suited to e-commerce and large datasets

Easy setup with SDKs supporting multiple programming languages

Cloud and self-hosting options, with affordable plans for smaller teams

3. Algolia

Algolia provides an AI-powered search and discovery platform ideal for businesses needing quick, precise, and customizable search. It leverages machine learning algorithms to optimize relevance and supports rich features, such as advanced typo tolerance and merchandising tools, to personalize search experiences. Beginners appreciate Algolia’s straightforward, user-friendly interface, even as it offers powerful capabilities suited to e-commerce and media platforms.

Key Features

Search analytics to identify top-performing content and optimize results

Extensive typo tolerance and synonym management for precise queries

Merchandising Studio to build customer-centric personalized search experiences

Integrations with major CMS and e-commerce platforms (Shopify, WordPress)

API support for multiple languages (.NET, Python, PHP)

4. Typesense

Typesense is an open-source search engine focused on simplicity, speed, and cost-effectiveness. It offers real-time, as-you-type search with intelligent relevance ranking powered by AI. Typesense supports faceted, semantic, and recommendation-based search without complex setup, making it attractive to startups and e-commerce businesses.

Key Features

Instant search with auto-completion and relevant ranking

Faceted filtering and semantic search capabilities for refined results

Personalized recommendations based on user behavior

Free self-hosted option and affordable managed hosting plans

Multi-language API clients, including JavaScript, Python, and Ruby

5. Apache Solr

Apache Solr is a veteran open-source search platform built on Apache Lucene. It offers powerful distributed search with excellent scalability, making it suitable for large-scale enterprise applications. Solr features a comprehensive user interface, rich documentation, and a supportive community. Its strength lies in handling complex, full-text search queries and providing seamless integration with databases.

Key Features

Standards-based open interfaces using JSON, XML, and HTTP for easy integration

Robust monitoring and metrics via JMX for performance insights

Scalable distributed architecture suitable for heavy workloads

Built-in UI for administration and query exploration

Extensible plugin system supporting MySQL, Drupal, Magento, and more

6. Vespa

Vespa.ai is a search engine optimized to handle large datasets at scale, powered by AI. Combining lexical, vector, and structured data search, Vespa enables real-time, scalable recommendations and insights. Its advanced machine-learning ranking and automatic scalability make it ideal for sectors such as healthcare, finance, and e-commerce, where both speed and accuracy are critical.

Key Features

Machine learning-based ranking tailored to diverse use cases

Automated linear scalability to accommodate growing data loads

Continuous improvements with safe deployment practices

Powerful support for hybrid search across multiple data types

APIs compatible with popular programming languages like Java, C++, and Go

7. Xapian

Xapian is an open-source search library written in C++ with bindings for other languages like Python, PHP, and Java. Unlike standalone search engines, Xapian is integrated directly into applications, giving developers high flexibility for building custom search solutions. It supports advanced features such as phrase searching and relevance feedback, but lacks machine learning personalization.

Key Features

Highly portable across operating systems, including Windows, Linux, and macOS

Precise phrase and proximity search capabilities

Relevance feedback that refines query terms for better results

Multi-language bindings for easy integration

Compatible with databases like MySQL and CMS platforms such as WordPress

8. Bleve

Bleve is a modern Go (Golang) indexing and search library that enables developers to embed search functionality directly into their apps. It supports schema-free indexing of JSON documents, versatile text analysis in many languages, and standard search scoring techniques. Bleve’s simplicity and open-source licensing make it attractive for projects built on Go.

Key Features

Embedded full-text search engine requiring no external service

Advanced search types, including Boolean, phrase, proximity, and geo-spatial searches

Built-in text analyzers for dozens of languages

Schema-free JSON document indexing

Integration with popular Go web frameworks like Echo and Fiber

9. Sphinx Search

Initially built for MySQL, Sphinx has evolved into a high-performance, standalone search engine popular for efficiently indexing large datasets. It supports real-time indexing, distributed search across servers, and requires fewer system resources than Elasticsearch. Its strong SQL database integration makes it a good fit for organizations that rely heavily on relational data.

Key Features

Native support for MySQL, SQLite, and ODBC databases

Distributed search capabilities for load balancing and redundancy

Efficient resource usage, allowing deployment on modest hardware

Customizable relevance through field weighting and proximity control

Real-time indexing for fast updates on dynamic datasets

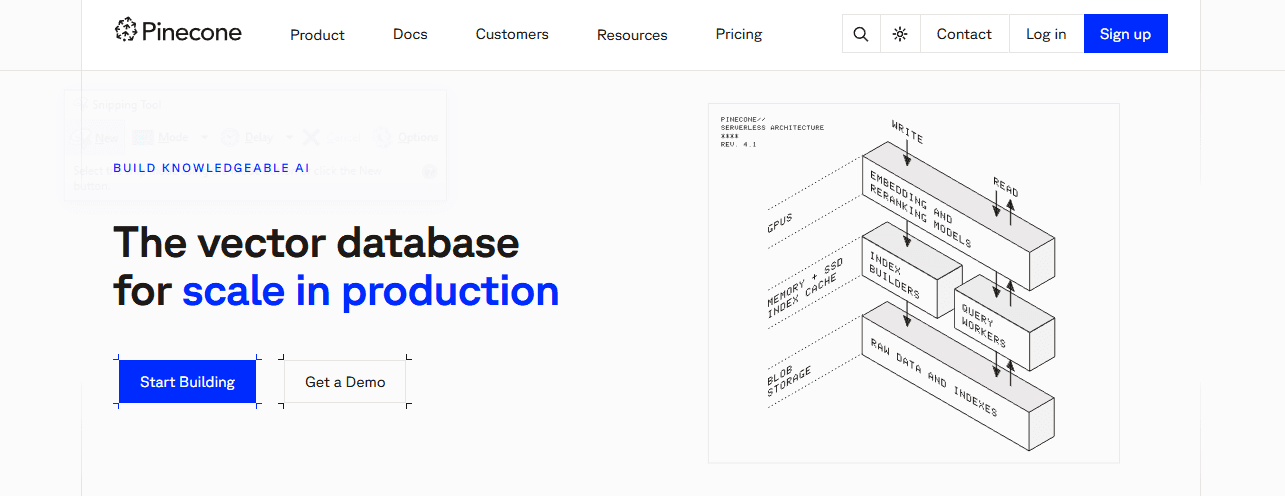

10. Pinecone

Pinecone is a specialized vector database designed for similarity search, making it ideal for AI-powered applications that use natural language processing and deep learning. It provides a hybrid search that combines semantic vector search with traditional keyword search to enhance result relevancy. Pinecone is easy to integrate with popular AI tools and cloud services, but is available only as a managed cloud service.

Key Features

Support for hybrid vector and keyword searches

Retrieval augmented generation (RAG) for building advanced AI chatbots

Filtered vector search for personalized recommendations

Easy API integration with tools like Hugging Face and AWS

Scalable cloud infrastructure with pay-as-you-go pricing

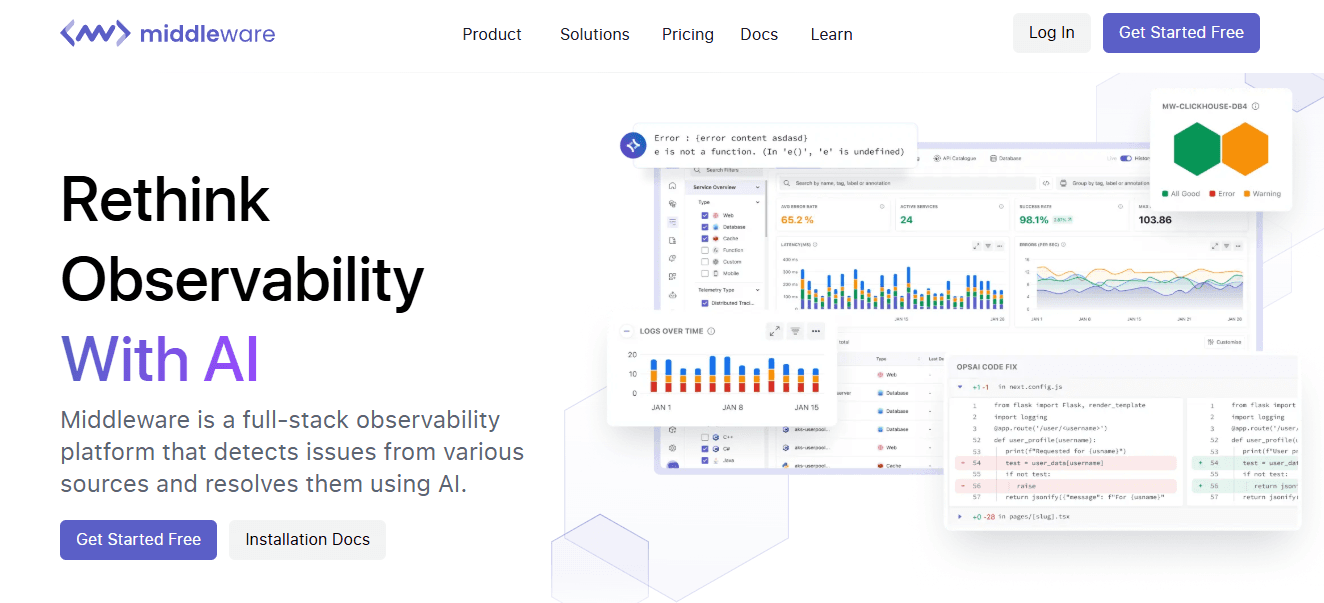

11. Middleware

Middleware is a full-stack observability platform that monitors and optimizes search engine performance in real time. Although not a search engine itself, it serves as a vital tool for detecting failures, analyzing user behavior, and alerting on indexing or query performance issues. It integrates seamlessly with popular search engines such as Meilisearch and Algolia to ensure smooth search operations.

Key Features

Detects query failures and system downtimes proactively

Provides insights into user search intent through click and trend analytics

Real-time alerts for errors, slow queries, and indexing problems

Integrates with cloud platforms such as AWS, Azure, and Google Cloud

Affordable pricing with a free tier for basic monitoring

12. Denser.ai

Denser.ai is a cutting-edge AI-native search platform that delivers highly accurate, context-aware search results by understanding the intent behind queries. Unlike traditional keyword matching, it uses semantic AI and Retrieval-Augmented Generation (RAG) to continuously update its index in real time, ensuring fresh and relevant content. Denser.ai is ideal for businesses that need dynamic search capabilities across customer support and e-commerce, and it supports effortless integration via chat widgets, REST APIs, and embedded iframes.

Key Features

Real-time indexing and data updates via Retrieval-Augmented Generation (RAG)

Semantic AI for understanding query intent and meaning

Automatic data cleaning to remove noise and duplicates

Continuous learning from user interactions to refine results

Simple integration with multiple platforms through chat widgets and APIs

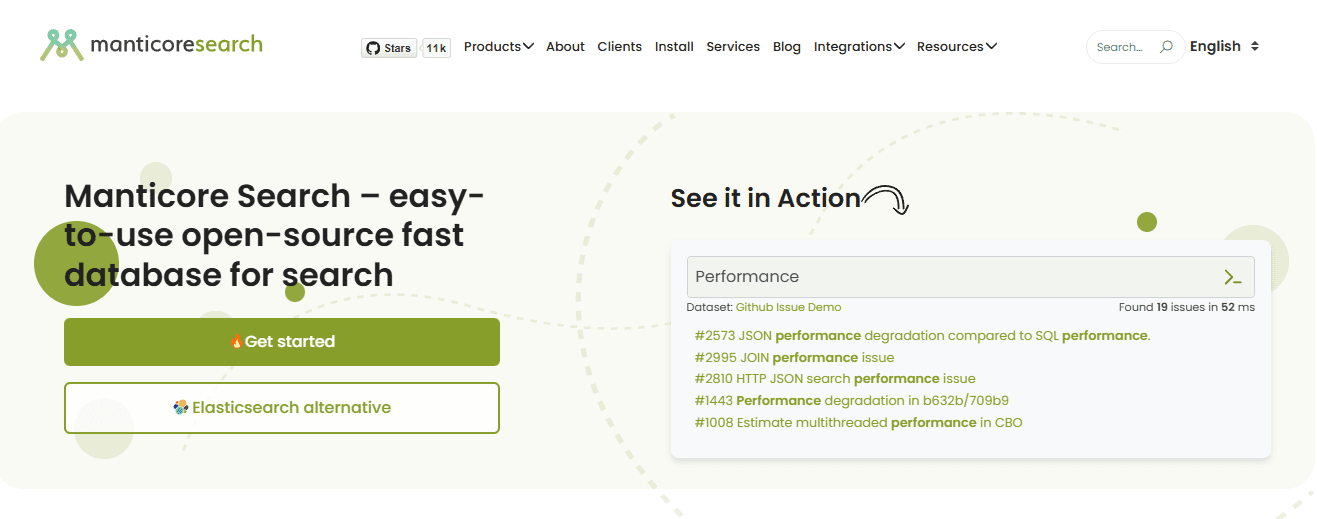

13. Manticore Search

Manticore Search is a developer-friendly, open-source search engine designed for high speed and extensive search capabilities. Support for SQL-like queries helps developers familiar with databases interact with the search engine with ease. Manticore’s real-time indexing and full-text search make it suitable for applications that require fast, scalable search while providing fine-grained control over ranking and relevance. Although it is highly performant, some users report occasional index stability issues.

Key Features

Blazing fast search performance: up to 15x faster on small data sets

Real-time indexing and query processing

Over 20 full-text operators and ranking factors for complex queries

Support for multiple data formats and SQL-compatible query language

Open-source and free to use, with strong community backing

How Should Teams Choose Between These Options?

Match capability to the problem, not prestige. If you need instant frontend latency and minimal ops, go with Meilisearch or Typesense. If you need managed merchandising and analytics, Algolia is a good fit. For vector-first AI retrieval, Pinecone or Denser.ai are better starting points. For heavy customization and in-house control, Solr, Vespa, or Xapian make more sense. Think in terms of query types, data velocity, security constraints, and the human workflows you must support.

Why Raw Search Often Fails At Scale

Most teams keep patching keyword indexes and stitching results into dashboards because that approach is familiar and fast to ship. As users, datasets, and decision owners multiply, relevance drifts, context is lost across tools, and manual handoffs consume time. Platforms like enterprise AI agents change the equation by stitching organizational memory with secure connectors and actionable workflows, so search becomes a trigger for planning and execution rather than a dead-end result.

A Quick Reality Check For Product And Engineering Leaders

When you choose a search engine, write down the nonfunctional requirements first: expected query volume, uptime targets, data privacy needs, and whether answers must connect to workflows. Treat integrations and permission models as primary constraints, because reworking those later costs far more than switching the indexing engine itself. Search is a tool and a behavior; if your index cannot remember projects, tie results to people, or start work for a user, it is only half a company brain. Keep reading to uncover how that half was built, and why it matters next.

What is Elasticsearch?

Elasticsearch is a powerful engine for teams that need fine-grained control over indexing, relevance, and real-time query performance. Still, it asks for ongoing operational discipline to keep latency low and costs predictable. It pays off when you can commit engineering time to tune JVM, shards, and ingestion patterns; otherwise, the complexity becomes the problem you were trying to solve.

How Do Teams Keep Predictable Latency In Production?

When we moved a high-volume telemetry pipeline to Elasticsearch over three months, the pattern was clear: latency stays steady until periodic tasks and merge pressure collide, and then tail latency spikes. The root causes are usually JVM heap pressure, aggressive segment merges, and unexpected background I/O. Practical levers that actually change outcomes are sensible heap sizing (leave room for the OS page cache), deliberate refresh and flush policies during bulk loads, and careful threadpool and circuit-breaker settings so queries don’t compete with indexing work.
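The heap-sizing and bulk-load levers above can be sketched as small helpers. This is a minimal illustration, not a tuning guide: `index.refresh_interval` and `index.number_of_replicas` are real Elasticsearch index settings, but the function names, the 31 GB ceiling, and the default values are assumptions chosen for the example, and the HTTP call that would apply the settings to a cluster is deliberately left out.

```python
# Assumption: a widely used rule of thumb gives the JVM at most half of a
# node's RAM and keeps the heap under roughly 31 GB, so the rest of memory
# stays available to the OS page cache.
HEAP_CEILING_GB = 31


def recommended_heap_gb(node_ram_gb: int) -> int:
    """Return a heap size that leaves at least half of RAM for the page cache."""
    return max(1, min(node_ram_gb // 2, HEAP_CEILING_GB))


def bulk_load_settings() -> dict:
    """Settings body to apply before a large bulk load.

    Disabling refresh and replicas keeps indexing from competing with
    refresh work and replica copies while the load runs.
    """
    return {"index": {"refresh_interval": "-1", "number_of_replicas": 0}}


def steady_state_settings(refresh_interval: str = "1s", replicas: int = 1) -> dict:
    """Settings body to restore after the bulk load finishes (values hypothetical)."""
    return {
        "index": {
            "refresh_interval": refresh_interval,
            "number_of_replicas": replicas,
        }
    }
```

Under these assumptions, `recommended_heap_gb(64)` returns 31 and `recommended_heap_gb(16)` returns 8. The two settings bodies are meant to be sent to the index settings endpoint before and after a load, so the relaxed policy never becomes the permanent one.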

What Silently Inflates Costs And Risk?

Pattern recognition across customers shows the same failure mode, whether it is logs, product search, or analytics: index churn and undersized nodes create a debt that compounds. Small inefficiencies in mapping, tiny shards, or heavy stored fields multiply into disk, CPU, and snapshot costs. The misconception that only concurrent users matter is costly, because computation per request and snapshot/replica overhead often dominate cloud bills as data grows.

How Big Is Elasticsearch In The Wild, And Why Does That Matter?

The platform’s enterprise footprint explains why mastering its ops matters: according to Syone (2025), Elasticsearch is used by more than 80% of Fortune 500 companies, and many large organizations rely on it as core infrastructure, which raises the bar for security, compliance, and uptime. At a global scale, the pressure is real: according to 01net (2025), Elasticsearch handles over 2 billion queries per day, and tiny inefficiencies become noticeable costs when that many requests run continuously.

Most teams manage this by bolting monitoring and runbooks on top of their clusters, because that feels achievable and familiar. But as indexes, teams, and permission boundaries multiply, that approach breaks down: alerts create triage work, dashboards only show symptoms, and engineering time drains into firefighting. Solutions like enterprise AI agents change the framing, not by replacing search, but by linking search results to organizational memory and automated steps, so context transfer stops being manual, and decisions move faster.

What Actually Fails When Projects Scale Beyond Prototypes?

Constraint-based thinking helps: if you need sub-100ms tail latency for user-facing search and you can afford dedicated ops, Elasticsearch remains a fit because you can tune everything. When you need cross-app context, role-aware recall, and automated follow-through without adding a large ops team, raw indices stop solving the human problem. That failure mode is visible in timelines: indexes remain accurate, but people still spend hours stitching answers together across tools, and project momentum slows. Think of Elasticsearch like a high-performance kitchen: with skilled chefs and a whole crew, it turns out incredible meals quickly; with only one cook, it becomes a burned mess, and nobody eats on time. But the more challenging part, the one that makes teams rethink everything, shows up next.

Why Do People Switch From Elasticsearch?

The short answer: teams leave Elasticsearch when the tradeoffs stop being worth it. Control and configurability matter until unpredictable costs, scaling friction, and fractured context start hurting delivery and morale.

What Operational Surprises Push Teams Over The Edge?

Operators discover the real bill only after steady growth. Snapshot and replica policies that looked safe in staging suddenly multiply storage and I/O costs in production, and emergency maintenance windows appear without warning. According to the User Survey 2023, 30% of users reported switching from Elasticsearch due to high operational costs, indicating that cost is not just a budgeting issue; it affects product roadmaps and hiring plans. It feels like buying a high-performance truck, only to realize you need a fleet budget to fuel it.

When Does Scaling Become A Business Problem, Not Just A Tech Problem?

As index size and query complexity grow, the effort to keep tail latency acceptable becomes a recurring line item: more nodes, more replicas, more specialized hires, and slower feature velocity. In the Tech Community Poll, 25% of respondents cited difficulty in scaling as a reason for switching from Elasticsearch, which maps to the moment teams stop optimizing and start firefighting. When we migrated a mid-market HR product over eight weeks, emergency maintenance rose from zero to three events per month, costing engineers roughly 10 to 15 hours each week to triage, which pushed feature work back several sprints.

Why Expertise Often Fails To Fix The Real Pain

Expert tuning can mask symptoms but not eliminate them. Distributed query plans, background merges, and opaque JVM behavior create observability blind spots that only deepen as teams add more data sources. That technical debt translates into emotional debt; engineers burn out reconciling inconsistent search results, product managers lose trust in metrics, and support teams field customer confusion. This is why learning curve complaints are not just about documentation; they are about sustained cognitive load over months of operation.

Most Teams Manage Search Familiarly, And That Makes Sense

The familiar approach is self-hosting or a managed Elasticsearch tier because it gives control and a clear upgrade path. That approach works early and is honest, but as stakeholders multiply, handoffs grow, context fragments, and decisions stall. Solutions like enterprise AI agents centralize contextual memory, automate routine follow-ups, and surface role-aware answers tied to project state, so teams trade hours of firefighting for faster, auditable outcomes.

What People Miss When They Plan A Migration

License changes, connector maintenance, and permission mapping are not glamorous, but they are the slow leaks that sink migrations. Teams often budget for data transfer and index rebuilds, but forget the cost of remapping permissions across tools, testing edge-case queries, and re-training staff on new failure modes. Think of it as replacing a kitchen stove: the new model might cook faster, but if the plumbing, wiring, and staff routines are different, you still face a week of chaos and lost dinners.

How The Decision Actually Looks In Practice

If you can staff a small ops team and tolerate periodic firefights, raw search engines remain a defensible choice. If, however, your priority is reducing manual context handoffs, automating recurring work, and treating search as a trigger for action rather than an endpoint, platforms that remember people, projects, and priorities change the equation. That shift is as much about human throughput as it is about query throughput.

Coworker transforms your scattered organizational knowledge into intelligent work execution through our breakthrough OM1 (Organizational Memory) technology, which understands your business context across 120+ parameters. Unlike basic AI assistants that just answer questions, Coworker's enterprise AI agents actually get work done: researching across your entire tech stack, synthesizing insights, and taking actions like creating documents, filing tickets, and generating reports. With enterprise-grade security, 25+ application integrations, and rapid 2-3 day deployment, we save teams 8-10 hours weekly while delivering 3x the value at half the cost of alternatives like Glean. Ready to see how Coworker can transform your team's productivity? Book a free deep work demo today to learn more about our enterprise AI agents!

That decision sounds final, but the complex trade-offs are only revealed when you start comparing migrations.

How to Choose the Right Elasticsearch Alternative

Focus on outcomes first, then let tests and economics decide the engine. I recommend you define acceptable tail latency, the exact query patterns that matter, and a realistic cost envelope, then validate alternatives with replayed traffic and TCO models before swapping anything in production.

What Should We Measure First?

Start with three measurable constraints: P99 query latency under peak load; precision or MRR for the queries that drive decisions; and the human cost of operating the system, in hours per week. Capture those as firm SLAs for the evaluation, then build a representative dataset and a query set that reflect both frequent and rare, but critical, asks. Don’t guess these numbers; record them for two weeks, including spikes and background indexing events, so your benchmarks reflect real operational behavior.
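Two of those measurements are easy to compute from recorded data. The sketch below shows a nearest-rank P99 over latency samples and mean reciprocal rank (MRR) over a labeled query set. The function names and the input shapes (a list of milliseconds, and dictionaries keyed by query) are assumptions made for illustration, and nearest-rank is one of several accepted percentile definitions.

```python
def p99(latencies_ms):
    """Nearest-rank 99th percentile of a non-empty list of latencies."""
    ordered = sorted(latencies_ms)
    # Integer form of ceil(0.99 * n), which avoids float rounding surprises.
    rank = (99 * len(ordered) + 99) // 100
    return ordered[rank - 1]


def mean_reciprocal_rank(ranked_results, relevant):
    """Average of 1/rank of the first relevant hit per query (0 when none).

    ranked_results: {query: [doc_id, ...]} in the order the engine returned.
    relevant:       {query: {doc_id, ...}} judged relevant by a human.
    """
    total = 0.0
    for query, results in ranked_results.items():
        wanted = relevant.get(query, set())
        for position, doc_id in enumerate(results, start=1):
            if doc_id in wanted:
                total += 1.0 / position
                break
    return total / len(ranked_results)
```

For 100 samples valued 1 through 100, `p99` returns 99. Feeding both functions from the same two-week recording keeps the latency and relevance numbers tied to identical traffic.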

How Do We Safely Simulate Real-World Behavior?

Create a replay harness that runs live queries against candidate systems while they index, and run it for at least 72 continuous hours with scheduled indexing bursts and maintenance windows. Measure cold-start times, refresh lag, and tail latency during bulk loads, and compare how each engine behaves when replicas are lost or snapshots are restored. Those failure modes reveal the hidden operational debt that simple benchmarks miss.
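A replay harness of this kind can start very small. The sketch below assumes a hypothetical `search_fn` callable that wraps whichever candidate engine's client you are testing; it records per-query latency and treats failures as data points rather than crashes. Scheduling indexing bursts, killing replicas, and running for 72 hours are left to the surrounding tooling.

```python
import time


def replay(queries, search_fn, clock=time.perf_counter):
    """Run recorded queries through search_fn, recording latency and errors."""
    samples = []
    for query in queries:
        started = clock()
        error = None
        try:
            search_fn(query)
        except Exception as exc:  # a failed query is a measurement, not a crash
            error = repr(exc)
        samples.append(
            {
                "query": query,
                "latency_ms": (clock() - started) * 1000.0,
                "error": error,
            }
        )
    return samples


def error_rate(samples):
    """Fraction of replayed queries that raised an error."""
    if not samples:
        return 0.0
    return sum(1 for s in samples if s["error"] is not None) / len(samples)
```

Passing the clock in as a parameter is a design choice that makes the harness testable with a fake timer. The same `samples` list can feed tail-latency and error-rate comparisons across engines.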

How Should Teams Model The Total Cost Of Ownership?

Price per node is only part of the story. Your model must include snapshot storage, replica footprint, network egress, SRE time, and the escalation cost when SLAs slip. Budget planning matters because many organizations are already reassessing their choices, as noted by the Enterprise Search Trends Report 2025 (2025-02-10), which finds that over 60% of companies are considering alternatives to Elasticsearch due to cost concerns. Build a 36-month TCO projection with conservative growth assumptions and a line item for permission and connector maintenance.
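A 36-month projection along those lines can be sketched in a few lines. Everything here is an assumption for illustration: the field names, the flat node and egress costs, and the simplification that stored data grows by a fixed percentage each month while replicas and retained snapshots scale with it. Escalation cost when SLAs slip is not modeled and would need its own line item.

```python
from dataclasses import dataclass


@dataclass
class CostInputs:
    node_cost_per_month: float        # compute bill, held flat in this sketch
    primary_data_gb: float            # size of primary shards today
    replicas: int                     # replica copies per primary
    snapshot_copies: int              # full-size snapshot copies retained
    storage_cost_per_gb_month: float
    egress_cost_per_month: float
    sre_hours_per_week: float
    connector_hours_per_month: float  # permission and connector upkeep
    hourly_rate: float
    monthly_data_growth: float        # 0.03 means 3% growth per month


def project_tco(inputs: CostInputs, months: int = 36) -> float:
    """Sum monthly costs over the horizon, growing stored data each month."""
    total = 0.0
    data_gb = inputs.primary_data_gb
    for _ in range(months):
        # Primaries plus replicas plus retained snapshots all occupy storage.
        stored_gb = data_gb * (1 + inputs.replicas + inputs.snapshot_copies)
        storage = stored_gb * inputs.storage_cost_per_gb_month
        people_hours = (
            inputs.sre_hours_per_week * 52 / 12 + inputs.connector_hours_per_month
        )
        total += (
            inputs.node_cost_per_month
            + storage
            + inputs.egress_cost_per_month
            + people_hours * inputs.hourly_rate
        )
        data_gb *= 1 + inputs.monthly_data_growth
    return total
```

Running the same inputs through each candidate engine, with only the engine-specific fields changed, makes the comparison about total cost rather than node price.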

What Do Integrations And Permission Mapping Really Cost?

Map every connector you need, then estimate the engineering hours to keep each one working annually, including API churn and pagination edge cases. Test permission fidelity by running read-path and write-path audits, sampling 500 items per connector for access correctness, and measuring how long it takes to reconcile an access error during normal operations. These are the slow leaks that turn a migration into a multi-month project unless you plan for them explicitly.
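The sampling audit described above might look like the following. The two callables are hypothetical stand-ins: `expected_access` would read the source system's permission model and `actual_access` would ask the search layer who can see the item. The fixed seed is an assumption that makes a given audit run repeatable.

```python
import random


def audit_connector(items, expected_access, actual_access, sample_size=500, seed=0):
    """Sample items from one connector and compare expected vs. actual access.

    items:           list of item identifiers exposed by the connector.
    expected_access: callable(item) -> set of principals who should see it.
    actual_access:   callable(item) -> set of principals who can see it.
    """
    rng = random.Random(seed)
    if len(items) <= sample_size:
        sample = list(items)
    else:
        sample = rng.sample(items, sample_size)
    mismatches = [i for i in sample if expected_access(i) != actual_access(i)]
    accuracy = 1.0 if not sample else 1 - len(mismatches) / len(sample)
    return {"sampled": len(sample), "mismatches": mismatches, "accuracy": accuracy}
```

Returning the mismatched items, not just a score, gives the team a concrete list to reconcile and a way to time how long each reconciliation takes.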

Most teams handle search as infrastructure, because that feels familiar and keeps control in-house. That works at first, but as teams grow and cross-tool context becomes vital, manual handoffs multiply, and decision latency rises. Solutions like enterprise AI agents change that dynamic by linking recall with organizational memory and automations; platforms such as Coworker use OM1 memory architecture, secure integrations with 40+ apps, and the ability to plan and execute multi-step tasks to reduce those handoffs while preserving security and auditability.

Which Functional Features Should Tip The Balance?

Ask whether you need semantic ranking, hybrid vector-plus-lexical queries, online learning for ranking, or explainability for compliance. Test each candidate with the same relevance suite: precision@5, user satisfaction surveys after 1,000 queries, and replayed A/B trials for two weeks. Also check observability, query tracing, and the ease of pushing model updates without downtime, because feature velocity is where long-term costs show up in manpower and risk, not just dollars.
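For the precision@5 part of that relevance suite, a minimal sketch follows. It assumes each engine's results are captured as ordered lists of document IDs and that relevance judgments exist per query; the function names and data shapes are illustrative, not taken from any engine's API.

```python
def precision_at_k(results, relevant, k=5):
    """Fraction of the top-k results that are judged relevant."""
    top = results[:k]
    return sum(1 for doc_id in top if doc_id in relevant) / k


def mean_precision_at_k(ranked_results, relevant, k=5):
    """Average precision@k over every query in the suite."""
    scores = [
        precision_at_k(results, relevant.get(query, set()), k)
        for query, results in ranked_results.items()
    ]
    return sum(scores) / len(scores)


def compare_engines(runs, relevant, k=5):
    """Score several engines on the same suite: {engine_name: mean precision@k}."""
    return {
        name: mean_precision_at_k(ranked, relevant, k)
        for name, ranked in runs.items()
    }
```

Dividing by `k` even when an engine returns fewer than `k` results is a deliberate choice here: an engine that returns too little is penalized the same way as one that returns irrelevant hits.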

Is Vendor Momentum A Factor I Should Worry About?

Market trends matter for long-term risk. Tech Market Analysis 2025 (2025-01-15) projects that Elasticsearch’s market share will decrease by 15% by 2025, increasing the likelihood of changes in vendor economics or ecosystem support over time. Treat vendor ecosystem and roadmap stability as another axis in your decision matrix, especially if you plan multi-year investments.

How Do You Migrate Without Breaking Things?

Use a blue-green approach with canary traffic: route 5 to 10 percent of real queries to the new index while continuing to write to both systems. Measure divergence on the canonical metrics daily, and keep the old system live until parity is stable for at least two weeks. Automate rollback paths, and include permission reconciliation and audit logs in every cutover checklist so compliance and security do not become last-minute blockers. Think of the migration like moving a public library while readers are still inside, not like replacing a coffee machine; you must keep retrieval consistent, preserve card catalog mappings, and stage shelves so patrons rarely notice the change. The following section will show a live, hands-on way to test these ideas that most teams never try.
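The canary split and dual-write steps described above can be sketched as follows. This is an illustration under stated assumptions: queries carry a stable identifier, the two "indexes" are stand-in Python lists rather than real clients, and top-k overlap is used as one possible divergence measure among many.

```python
import hashlib


def routes_to_canary(query_id: str, canary_percent: int) -> bool:
    """Deterministically send a fixed share of queries to the new system.

    Hashing the identifier keeps a given user or session on the same side
    of the split across requests, which makes divergence easier to debug.
    """
    digest = hashlib.sha256(query_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100
    return bucket < canary_percent


def dual_write(doc, old_index: list, new_index: list) -> None:
    """Write every document to both systems so either can serve reads."""
    old_index.append(doc)
    new_index.append(doc)


def top_k_overlap(old_results, new_results, k=10):
    """Share of the old system's top-k that the new system also returns."""
    old_top = set(old_results[:k])
    if not old_top:
        return 1.0
    return len(old_top & set(new_results[:k])) / len(old_top)
```

Tracking `top_k_overlap` daily on canary traffic gives a simple parity signal. When it holds steady for the two-week window, raising `canary_percent` becomes a configuration change rather than a redeploy.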

Book a Free 30-Minute Deep Work Demo

Most pilots stall when messy data and fuzzy success metrics turn automation into another maintenance ticket, a pattern that quietly drains time and trust across mid-market teams. Run a focused 30-minute session to watch an agent synthesize context and complete a real task, as this is a low-friction way to test whether Coworker's enterprise AI agents can turn your organizational memory into real, repeatable outcomes.

Do more with Coworker.

Coworker

Make work matter.

Coworker is a trademark of Village Platforms, Inc

SOC 2 Type 2

GDPR Compliant

CASA Tier 2 Verified

Links

Company

2261 Market St, 4903 San Francisco, CA 94114

Alternatives
