16 Ways to Use AI For Knowledge Management

Nov 28, 2025

Daniel Dultsin

Imagine hunting for a single answer across email, intranets, and shared drives while a decision waits on you. That everyday drag shows why a knowledge management strategy matters: scattered documents, weak metadata, and slow search waste time and hide value. This guide gives practical, actionable strategies to upgrade how your organization handles information, cutting search times and surfacing hidden insights, so your teams spend less time looking and more time using what they find. What would faster access and smarter discovery let your people do tomorrow?

Coworker's enterprise AI agents make that shift real by layering semantic search, automated tagging, and knowledge graph linking onto your existing knowledge base, so teams find answers faster, preserve organizational memory, and surface insights you did not know were there.

Table of Contents

16 Ways to Use AI For Knowledge Management

What is AI in Knowledge Management?

Can AI Help With Unstructured Data?

Key Components of an AI Knowledge Base

How to Implement AI For Knowledge Management

Book a Free 30-Minute Deep Work Demo

Summary

Context-aware retrieval replaces brittle keyword matching and can cut search time by up to 50%, according to LivePro, turning hours of document hunting into minutes of actionable answers.

Automation for curation is becoming table stakes, with LivePro estimating that 75% of organizations will use AI for knowledge management by 2025, making automated tagging and content-gap detection operational priorities.

Unstructured data dominates the problem set, as Numerous.ai projects 80% of enterprise data will be unstructured by 2025, and organizations spend an average of $5 million annually managing it, so ingestion, normalization, and entity canonicalization are essential.

When combined with robust retrieval and verification patterns, AI yields measurable gains, with InData Labs reporting a 40% increase in productivity and Zendesk noting AI knowledge bases can boost agent productivity by 40% while cutting support costs by 30%.

Governance and provenance are nonnegotiable for safe scale: InData Labs finds AI can reduce time on KM tasks by 30%, but sustaining those gains safely requires answers that include provenance snippets, confidence scores, role-based limits, and immutable audit logs.

Prove value with tight pilots: for example, target tickets where agents spend 20 to 90 minutes hunting for attachments or SOPs, and run 4- to 8-week experiments that measure time-to-first-action and human correction rates.

This is where Coworker's enterprise AI agents fit in: they address connector freshness, provenance, and context by layering semantic search, automated tagging, and knowledge-graph linking onto existing sources, so teams find answers faster and preserve auditable context.

16 Ways to Use AI For Knowledge Management

AI becomes the working layer that turns stored documents into decisions you can act on, not just files you can find. Use it to cut time-to-answer, fill content gaps automatically, and connect knowledge to the systems where work actually gets done so teams stop switching contexts and start finishing work.

1. Information Retrieval

AI-driven knowledge management systems excel at quickly locating the most relevant information in large data collections. Unlike traditional search methods that rely solely on keywords, AI uses models that interpret the context and intent behind a query, so results are both fast and pertinent to what users actually need. This greatly improves how teams access and apply knowledge.

2. Content Creation and Curation

Managing the continuous influx of information is challenging. AI assists by automatically curating content, selecting the most relevant, up-to-date resources, and presenting them in clear, human-readable language. Beyond curation, AI generates new knowledge content by synthesizing existing data, enabling knowledge bases to grow dynamically and keeping teams informed with fresh insights.

3. Knowledge and Insight Discovery

AI uncovers hidden patterns and relationships within data that humans might miss. By analyzing complex datasets, AI helps discover valuable insights that can drive strategic decisions and innovation. This ability to reveal underlying knowledge fosters better problem-solving and competitive advantage.

4. Tagging and Classification

Manually tagging documents for categorization is tedious and slow. AI automates this process by intelligently tagging and classifying content based on meaning and context rather than just keywords. This results in a well-organized knowledge base that is easier to navigate and maintain, significantly reducing manual workload.
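
A minimal sketch of tag suggestion by similarity, assuming each tag is described by a short prototype text and a document receives every tag whose prototype is similar enough. The tag names, prototype phrases, and 0.15 threshold are hypothetical, and a bag-of-words vector stands in for a real embedding model so the example runs without dependencies; swapping in sentence embeddings is what turns this into matching on meaning rather than shared words.

```python
import math
import re
from collections import Counter

# Hypothetical tag prototypes: a few representative words per tag.
TAG_PROTOTYPES = {
    "billing": "invoice refund charge payment credit card subscription",
    "access": "login password sso reset account locked permission",
    "outage": "down error timeout unavailable incident latency",
}


def vectorize(text: str) -> Counter:
    """Word counts; a stand-in for a real sentence-embedding model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def suggest_tags(doc: str, threshold: float = 0.15) -> list:
    """Return (tag, score) pairs at or above threshold, best first, for human approval."""
    doc_vec = vectorize(doc)
    scored = [(tag, cosine(doc_vec, vectorize(proto))) for tag, proto in TAG_PROTOTYPES.items()]
    return sorted((s for s in scored if s[1] >= threshold), key=lambda s: s[1], reverse=True)
```

Suggestions come back ranked with scores, so an owner can approve or reject them instead of the system writing tags silently.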

5. Personalized User Experiences

AI customizes knowledge management interactions based on individual user behavior and preferences. It delivers tailored content recommendations and relevant search results specific to each user’s role or interests. This personalization leads to an improved user experience, higher engagement, and more effective knowledge utilization.

6. Content Gap Analysis

AI helps identify gaps in your knowledge base by analyzing existing content and flagging missing or underrepresented topics. Run continuously, this analysis helps the repository grow evenly, filling the gaps that matter most and keeping content relevant and complete, which in turn helps maintain a competitive edge.
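
One simple way to approximate gap analysis, sketched under the assumption that search logs record a topic label and the best retrieval score for each query: topics where users keep searching but rarely get a strong match are likely gaps. The log schema, the 0.5 score threshold, and `min_count` are illustrative assumptions.

```python
from collections import Counter


def find_content_gaps(query_log, min_count=3, score_threshold=0.5):
    """Group weak queries by topic and return likely gaps.

    query_log: iterable of (topic, best_score) pairs, where best_score is the
    top retrieval score returned for that query (a hypothetical log schema).
    Returns (topic, weak_query_count) pairs, most frequent first, keeping only
    topics with at least `min_count` weak queries.
    """
    weak = Counter(topic for topic, best_score in query_log if best_score < score_threshold)
    return [(topic, n) for topic, n in weak.most_common() if n >= min_count]
```

Each returned topic can then be routed as a work item to the owner of the nearest existing content.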

7. Knowledge Base Maintenance

Maintaining a knowledge base is simpler with AI automation. AI can flag outdated content, propose updates, archive unused material, and surface likely inaccuracies for content owners to correct, keeping your knowledge system accurate and trustworthy. This reduces the manual effort required for upkeep and boosts confidence in the information your team relies on.

8. Intelligent Search

AI enhances search functions by using natural language processing (NLP) and semantic search technologies. This means users can find the information they need even if they don’t use exact keywords. AI understands the intent behind queries, delivering precise, context-aware search results that improve productivity and user satisfaction.

9. Question Answering

AI-powered knowledge systems can directly answer user queries by extracting information from the knowledge base or by generating informed responses. This reduces the need for users to sift through multiple documents, delivering instant, practical answers that speed up decision-making and problem-solving.

10. Semantic Analysis and Tagging

AI goes beyond basic tagging and classification by interpreting the deeper meaning and context of your documents. Unlike simple keyword tagging, semantic analysis lets AI organize content more accurately and provide richer insights, leading to better knowledge discovery and easier navigation within your knowledge base.

11. Ethical and Compliance Monitoring

AI helps ensure knowledge management aligns with legal and ethical standards. It monitors data usage for regulatory compliance, flags sensitive or inappropriate content, and enforces data governance policies. This capability helps organizations avoid costly legal risks and maintain trustworthiness in handling information.

12. AI-Powered Chatbots and Intelligent Assistants

Conversational AI tools, such as chatbots, provide users with a natural way to interact with the knowledge base. Instead of typing complex search queries, users can ask questions in a conversational style and receive immediate, context-aware responses. This enhances accessibility and user engagement while reducing frustration with traditional search methods.

13. Predictive Analytics for Knowledge Trends

AI can analyze historical data to forecast future knowledge needs and trends. By anticipating what information users will require or what market developments could affect content relevance, organizations can proactively update and expand their knowledge bases. This predictive aspect enhances strategic planning and resource allocation.

14. Automated Workflow Integration

AI integrates knowledge management with other organizational workflows and tools. For example, it can automatically update documentation based on project management activity or customer interactions. This integration streamlines processes, keeps knowledge current, and reduces redundancy across systems.

15. Continuous Learning and Adaptation

AI-powered knowledge systems continuously learn from user interactions and new data inputs. This adaptive nature enables them to improve over time, becoming more accurate in delivering relevant information and insights. Continuous learning ensures the knowledge management system evolves alongside the organization’s changing needs.

16. Robust Security and Data Privacy Controls

AI-enabled knowledge systems combine standard safeguards, such as encryption and access controls, with AI-driven anomaly detection to help prevent unauthorized access and data breaches. Protecting data privacy while using AI supports compliance and preserves stakeholder confidence.

How Do Teams Find The Correct Information Fast?

AI replaces brittle keyword matching with context-aware retrieval, so queries return the exact procedure, ticket, or snippet you need, even when you phrase it conversationally. LivePro’s 2025 finding that "Knowledge management systems powered by AI can reduce search time by up to 50%" shows why this matters: cutting search latency turns hours of hunting into minutes of action. One pattern I see across support portals and engineering wikis is this failure mode: attachments and embedded files go dark in keyword searches, and troubleshooting repeatedly stalls until someone digs through emails or downloads.

How Should Content Be Curated And Generated To Remain Useful?

Treat AI as both editor and reporter. Have it surface the most recent, high-confidence sources, synthesize them into clear how-to steps, and flag contradictions for human review. Use automated content gap analysis to expose missing SOPs or stale troubleshooting guides, then route those gaps as prioritized work items. According to LivePro, "75% of organizations are expected to use AI for knowledge management by 2025." That level of adoption means automation for curation stops being optional and becomes part of how teams scale consistent outcomes.

What Happens To Workflows As Knowledge Needs Scale?

Most teams manage escalations with fragmented threads and manual handoffs because that approach is familiar and needs no new approvals. As projects and stakeholders multiply, context splinters, response times expand, and people repeatedly re-create the same answers. Teams find that platforms with persistent memory for projects and roles centralize context, enable multi-step reasoning across connected tools, and execute tasks like drafting emails or filing tickets, compressing review cycles while keeping full audit trails.

How Do You Keep AI-Driven KM Accurate, Compliant, And Trusted?

Start with provenance and access controls, not just model outputs. Log which documents fed a response, attach confidence scores, and restrict actions by role so automation cannot change sensitive records without approval. Monitor drift by sampling answers weekly and tying AI corrections back into the content pipeline so the system learns from real fixes. This approach preserves compliance and makes the system auditable, which enables knowledge to scale safely rather than becoming a liability.
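
The access rules above can be sketched as a small policy check. This is a hypothetical example: the `Answer` fields, role names, and action names are assumptions, not any particular product's API. An answer with no recorded sources can never trigger an action, and sensitive actions also need explicit approval.

```python
from dataclasses import dataclass


@dataclass
class Answer:
    text: str
    sources: list[str]   # IDs of the documents that fed the response
    confidence: float    # attached for reviewers and logs; not used as a gate here


# Hypothetical policy: which roles may trigger which actions.
ROLE_ACTIONS = {
    "viewer": set(),
    "agent": {"draft_reply"},
    "admin": {"draft_reply", "update_record"},
}
SENSITIVE_ACTIONS = {"update_record"}  # changes to systems of record


def authorize(answer: Answer, role: str, action: str, approved: bool = False) -> bool:
    """Allow an action only if the answer has provenance, the role permits the
    action, and sensitive actions carry explicit human approval."""
    if not answer.sources:
        return False
    if action not in ROLE_ACTIONS.get(role, set()):
        return False
    if action in SENSITIVE_ACTIONS and not approved:
        return False
    return True
```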

Where Should You Pilot AI for The Fastest Payoff?

Pick a high-frequency pain point, such as the set of tickets where agents spend 20 to 90 minutes hunting for attachments or SOPs, and connect those exact sources first. Index attachments deeply, enable natural-language question answering, and measure time-to-first-action and ticket reassignments. Start small, validate the reduction in context switching, then expand connectors and automated flows to other teams. Think of it as upgrading to a librarian who not only knows which shelf holds the book but also remembers who last edited it and which chapter changed, and can hand you the corrected passage ready to use. That fixes a lot; what breaks next is where things get unexpectedly complicated, and you will want to know why.

What is AI in Knowledge Management?

AI transforms knowledge management when teams treat it like an operational system, not a one-off project. You get durable processes for tagging, validating, and routing knowledge so answers stay accurate as the organization changes, and people can act on what the system returns with confidence.

What Operational Problems Does AI Actually Fix?

This pattern appears across product marketing and support groups: knowledge is scattered across Notion, Gong, Drive, and Slack, fragmenting context and creating duplicate work. It feels like looking for a single wire in a server rack and pulling the wrong plug. AI helps by automating the complex plumbing that maps who owns what, surfacing the freshest source for a fact, and reducing the noise from low-value fragments so people can spend their attention on interpretation, not hunting.

How Should Teams Govern And Measure An AI-Driven KM System?

Start by measuring what changes for people, not models. Track time-to-first-action, error rate on routed tasks, and the rate at which flagged gaps become assigned work. According to InData Labs (2025), "AI can reduce the time spent on knowledge management tasks by 30%." That means routine KM overhead can be cut substantially, but only if you pair automation with clear feedback loops and human verification at decision points. Add provenance metadata to every answer and audit periodically, so you can trace a recommendation back to its source document and the confidence threshold that produced it.

Which Technical Patterns Actually Matter In Production?

Hybrid retrieval, incremental indexing, and connector health checks matter more than the flavor of your LLM. Build embeddings with consistent chunking, keep a sparse index for exact-match signals, and run nightly re-indexing jobs to capture rapid updates. When teams split capability into modular agents, reliability improves; for example, use one agent for read-only retrieval and another, gated by approvals, for actions that alter systems. That constraint-based split reduces accidental writes while preserving automation where it helps most. Getting these patterns right is what lets the productivity upside materialize: InData Labs (2025) reports that "Organizations using AI for knowledge management report a 40% increase in productivity," which suggests the right patterns improve both speed and output quality.
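
A common way to combine a sparse keyword ranking with a dense embedding ranking is reciprocal rank fusion (RRF), which scores each document by summing 1/(k + rank) across the ranked lists. It is one hybrid-retrieval option among several, shown here as a sketch; the document IDs are made up, and k = 60 is a conventional default rather than a tuned value.

```python
def reciprocal_rank_fusion(rankings: list, k: int = 60) -> list:
    """Fuse several best-first lists of document IDs into one ranking.

    rankings: e.g. one list from a sparse keyword index and one from an
    embedding index. Each appearance contributes 1 / (k + rank), with rank
    starting at 1; documents found by several retrievers accumulate score.
    """
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda d: scores[d], reverse=True)
```

Because RRF uses only ranks, it needs no score calibration between the two indexes, which makes it a convenient first hybrid baseline before training a reranker.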

Most teams handle knowledge with manual scripts and shared drives because they are familiar. This works until projects and stakeholders multiply, at which point errors and handoffs balloon. Platforms like enterprise AI agents capture project state, roles, and priorities across hundreds of dimensions and connect to 40–50 apps, enabling end-to-end workflows rather than forcing people to piece them back together. That shift cuts the logistical labor of coordination, not by replacing judgment, but by removing the busywork that steals it.

How Do You Get People To Actually Use The System?

Run focused 6- to 8-week pilots on one high-friction workflow, show measurable wins, then expand. Embed micro-prompts where people already work, make suggested actions reversible, and staff a rotating champion to triage low-confidence responses. When teams see that a recommended email or ticket update is accurate and easy to revert, trust grows quickly; when it is brittle, adoption collapses faster than you can say "rollout." Think of KM as a nervous system: sensors collect signals, processors decide relevance, and outputs trigger action. If any link is weak, the whole organism compensates by overworking humans. Strengthen the weakest link first, instrument it, then iterate. The messy truth is that what looks like progress often hides brittle edges, and those edges always show up when the system is asked to act.


Can AI Help With Unstructured Data?

Yes. AI does more than label documents; it extracts the signals buried inside messy text, audio, and images and turns them into structured, actionable facts you can trust and use. That capability is nonnegotiable given how information is distributed today: according to Numerous.ai (2023), "80% of enterprise data will be unstructured by 2025."

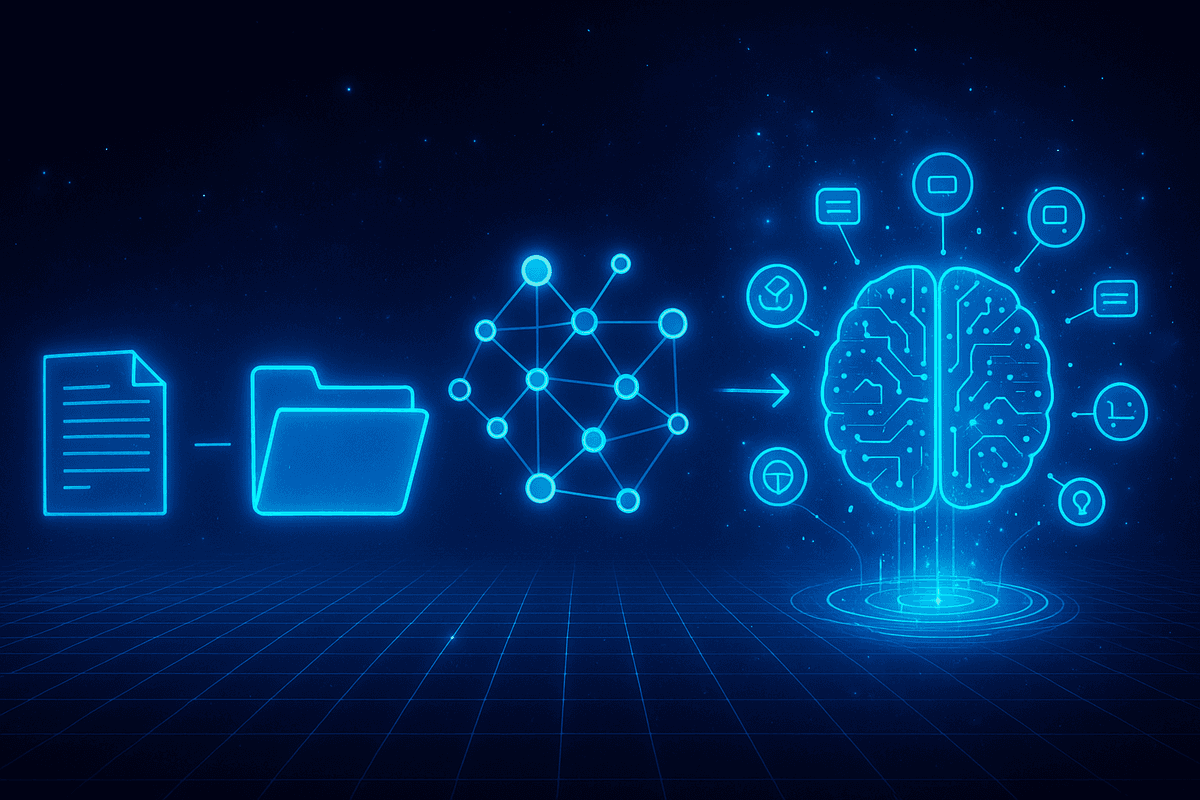

How Do Teams Actually Turn Noise Into Facts?

Treat the work as a simple processing pipeline with clear gates. First, ingest and normalize input types with quality checks, for example, OCR confidence thresholds for scanned notes and transcript alignment for voice. Next, create semantic representations, usually sentence or paragraph embeddings, and run hierarchical clustering to group related content. Then apply entity linking and canonicalization so different phrases resolve to the same business object, and finally attach provenance metadata and a human review gate before anything changes a system of record. Think of it like building a map from satellite photos: you stitch images, correct distortions, tag rivers and roads consistently, and only then hand the map to the driver.
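
The gating and canonicalization steps might look like this for a single extracted mention. It is a sketch under stated assumptions: the alias table, the entity IDs, the source string format, and the 0.85 OCR confidence threshold are all hypothetical, and a production system would use a proper entity-linking model rather than an exact alias lookup.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical alias table mapping surface forms to canonical business objects.
ALIASES = {
    "acme": "CUST-001",
    "acme corp": "CUST-001",
    "acme corporation": "CUST-001",
}


@dataclass
class Extracted:
    entity_id: Optional[str]  # canonical ID, or None if unresolved
    surface: str              # text exactly as it appeared in the source
    source: str               # provenance: where the mention was found
    ocr_confidence: float
    needs_review: bool        # True means a human must confirm before any write


def process_mention(surface: str, source: str, ocr_confidence: float,
                    min_ocr: float = 0.85) -> Extracted:
    """Gate on OCR quality, canonicalize the mention, and attach provenance."""
    entity_id = ALIASES.get(surface.strip().lower())
    needs_review = ocr_confidence < min_ocr or entity_id is None
    return Extracted(entity_id, surface, source, ocr_confidence, needs_review)
```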

What Breaks When You Do The Obvious Thing?

Chunking without context loses the thread, and that loss creates hallucinations downstream. This pattern appears across transcript and ticket projects: initial LLM classification accuracy often hovers around 30 percent without prompt optimization and a deliberate embedding strategy, so teams get brittle results that frustrate rather than help. The technical fixes are precise: use overlapping semantic windows; use coarse-to-fine retrieval, where a lightweight index filters candidates and a heavier model verifies them; and tune a reranker on your holdout set. Add an independent verifier agent that checks assertions against canonical sources before any automated action proceeds.
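
Overlapping windows can be as simple as the following sketch, which assumes text has already been split into sentences and uses a fixed sentence count as a stand-in for true semantic boundaries. The `size` and `overlap` defaults are illustrative.

```python
def overlapping_windows(sentences: list, size: int = 4, overlap: int = 1) -> list:
    """Split sentences into windows of `size`, where each window repeats the
    last `overlap` sentences of the previous one, so context that straddles a
    boundary appears intact in at least one chunk."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be in [0, size)")
    step = size - overlap
    windows = []
    for start in range(0, len(sentences), step):
        windows.append(sentences[start:start + size])
        if start + size >= len(sentences):
            break  # the last window already reaches the end of the text
    return windows
```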

Why Do Accuracy And Provenance Matter More Than Raw Recall?

If a system returns every possibly relevant sentence but cannot say which source supports the claim, humans will ignore it. Store source location, extraction confidence, and a short interpretable snippet with every answer, and instrument feedback so reviewers can mark false positives and have those corrections feed back into the reranker. Run nightly drift checks on embedding distributions and connector freshness; small API changes or a shift in language use will silently erode precision unless you surface them.
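
A nightly drift check can start with something as blunt as comparing the centroid of tonight's embeddings with a baseline centroid. This sketch assumes embeddings are plain lists of floats with nonzero norms; the 0.05 alert threshold is an assumption to calibrate against your own history, and richer checks (per-cluster or distribution tests) catch subtler shifts.

```python
import math


def centroid(vectors: list) -> list:
    """Component-wise mean of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def cosine_distance(a: list, b: list) -> float:
    """1 - cosine similarity; assumes neither vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (na * nb)


def embedding_drift(baseline: list, tonight: list, threshold: float = 0.05):
    """Return (distance, alert) comparing tonight's centroid with the baseline."""
    d = cosine_distance(centroid(baseline), centroid(tonight))
    return d, d > threshold
```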

Most teams manage unstructured data with ad hoc scripts and manual tagging because that is low-friction at first, and it works until scale introduces repeated rework and costly audits. The real cost shows up quickly for organizations with heavy content flows: according to Numerous.ai (2023), "Organizations spend an average of $5 million annually on managing unstructured data." Platforms like enterprise AI agents provide the bridge, centralizing connectors and a persistent memory of projects and roles so teams reduce manual reconciliation while keeping auditable provenance.

How Should You Validate An Approach Before Wider Rollout?

Set two baselines: a human review workload metric and a correctness metric tied to business actions. For example, measure the percent of extracted entities that require human correction and the time from question to actionable guidance. Use A/B tests on ticket routing or knowledge-based responses, hold out a realistic sample for reranker tuning, and require that any automation that writes to a system meet a high-confidence threshold plus an approval step. Those controls let you expand automation without trading safety for speed.

Where To Prioritize Effort If You Have Limited Time Or Budget?

Start with the highest-friction sources where human time is currently spent reconciling content, such as voice transcripts for support triage or emailed contracts that block deals. Use prebuilt connectors, focus on a single vertical workflow for a 4- to 8-week pilot, instrument it tightly, and measure the reduction in human review. Embedding and hierarchical clustering usually yield usable topics quickly, and prompt optimization often turns an unusable output at roughly 30% accuracy into something your team will rely on.

A Short Analogy To Keep In Mind

Treat unstructured data like hay bales in a barn, not a stack of neatly labeled boxes: you can try to sort by hand, but the efficient move is to run a baler that compacts, tags, and wires each bale so the forklift can fetch exactly what the operator needs.

Coworker transforms your scattered organizational knowledge into intelligent work execution through our breakthrough OM1 (Organizational Memory) technology, which understands your business context across 120+ parameters, unlike basic AI assistants that just answer questions.

Coworker's enterprise AI agents actually get work done by researching across your entire tech stack, synthesizing insights, and taking actions like creating documents, filing tickets, and generating reports. With enterprise-grade security, 25+ application integrations, and rapid 2-3 day deployment, Coworker saves teams 8-10 hours weekly while delivering 3x the value at half the cost of alternatives like Glean, so book a free deep work demo today to see how it fits your workflows. That progress feels like a breakthrough, but the next bottleneck is more structural and much harder to solve.


Key Components of an AI Knowledge Base

An AI knowledge base succeeds when its components not only find and summarize facts, but prove them, act on them safely, and retire them when they stop being true. The essential parts tie outputs to human review and auditable actions.

Data Sources and Integration

A fundamental element of an AI knowledge base is its ability to connect with multiple data sources. This integration ensures the knowledge base remains comprehensive and up-to-date by continuously refreshing information from databases, documents, customer interactions, and external systems. The integration layer facilitates seamless connection to tools such as CRMs, IoT devices, and ticketing systems, enhancing the richness and relevance of the content available for AI processing.

Intelligent Search

Intelligent search capabilities set AI knowledge bases apart by focusing not only on keyword matching but on understanding user intent, context, and language nuances. This enables the system to deliver precise, relevant results that truly address users' questions or problems. Through machine learning and natural language processing (NLP), the search engine interprets synonyms, phrasing variations, and query nuances to guide users to the needed knowledge efficiently.

Natural Language Processing (NLP)

NLP is essential for allowing users to interact with the knowledge base in everyday human language. This technology processes natural language queries and provides contextual, conversational responses rather than simple keyword-based results. It enhances user experience by enabling the system to comprehend and generate language, recognize entities and intents, and maintain context across interactions, especially valuable in customer service and support scenarios.

Automated Content Curation and Tagging

This component involves the AI's ability to automatically organize, prioritize, and tag content within the knowledge base. By using algorithms to curate content, the system keeps information structured, easy to navigate, and relevant. Automated tagging helps categorize knowledge assets accurately, improves searchability, and lets the knowledge base scale efficiently with minimal manual intervention.

Machine Learning Algorithms and Models

Machine learning (ML) algorithms enable the AI knowledge base to learn from user interactions and data patterns continuously. These algorithms predict user needs, improve response accuracy over time, and adapt dynamically to changing conditions. ML models may analyze historical data to anticipate trends, personalize content recommendations, and support decision-making processes, enhancing overall knowledge management effectiveness.

Personalization Features

Personalization tailors the knowledge base experience to individual user preferences and behaviors. By tracking interactions, previous searches, and user profiles, AI customizes content delivery to meet unique needs. This targeted approach increases user satisfaction and efficiency by presenting information in the most relevant and accessible way for each user.

Analytics and Reporting

Analytics play a critical role by providing actionable insights into how users engage with the knowledge base and how content performs. Reporting tools track metrics such as popular queries, content gaps, and user satisfaction. These insights enable continuous optimization of the knowledge base, ensuring that it evolves based on actual usage patterns and business goals.

How Do You Make Answers Verifiably Accurate?

During an eight-week support pilot, agents pushed for blunt, unfiltered answers and repeatedly tested edge cases, which showed that accuracy depends as much on process as on model quality. Build a claim-level verifier that checks generated assertions against canonical sources before anything is returned, attach short provenance snippets and an extraction confidence, and require an explicit human approval path for any suggestion that will change a system of record. Include an independent reranker tuned on holdout queries, and log every verification step so reviewers can trace where a recommendation came from and why it was accepted or rejected.
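
A claim-level verifier can start with narrow, checkable rules. The sketch below implements one such rule, assumed for illustration: every number stated in a generated claim must also appear in the cited source. It says nothing about claims without numbers, so treat it as one check in a chain rather than a complete verifier; entailment models are the usual next step.

```python
import re

NUMBER = re.compile(r"\d+(?:\.\d+)?")


def verify_claim(claim: str, source_text: str) -> dict:
    """Crude claim-level check: every number asserted in the claim must also
    appear in the cited source. Returns the verdict plus the unsupported
    values, so a reviewer can see why a claim was rejected."""
    claimed = set(NUMBER.findall(claim))
    found = set(NUMBER.findall(source_text))
    missing = sorted(claimed - found)
    return {"supported": not missing, "unsupported_values": missing}
```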

How Should Systems Detect And Respond To Connector Failures?

A recurring failure mode is stale connectors that silently feed outdated or incomplete data. Treat connectors like live sensors, not one-off imports: maintain a health dashboard with heartbeat checks, schema validation, and synthetic query tests that run hourly. When a connector fails, circuit-break it to a read-only snapshot and notify the owner, while a graceful fallback serves cached, timestamped results with a freshness flag. Think of this as an air-traffic control pattern: you never route a plane without a confirmed runway report.
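
The circuit-breaker and fallback behavior can be sketched as a wrapper around any fetch function. The class name, failure count, and cooldown are hypothetical; the injectable `clock` exists so the behavior can be tested without waiting, and owner notification is left as a comment.

```python
import time


class ConnectorBreaker:
    """Wraps a connector fetch. After `max_failures` consecutive failures the
    breaker opens and serves the last good snapshot, flagged as stale, until
    `cooldown` seconds pass and a live fetch is retried."""

    def __init__(self, fetch, max_failures=3, cooldown=300.0, clock=time.monotonic):
        self.fetch = fetch
        self.max_failures = max_failures
        self.cooldown = cooldown
        self.clock = clock
        self.failures = 0
        self.opened_at = None
        self.snapshot = None  # (data, fetched_at) from the last successful fetch

    def get(self) -> dict:
        now = self.clock()
        if self.opened_at is not None and now - self.opened_at < self.cooldown:
            return self._fallback(now)  # breaker open: do not hit the connector
        try:
            data = self.fetch()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = now  # open the breaker; notify the owner here
            return self._fallback(now)
        self.failures, self.opened_at = 0, None
        self.snapshot = (data, now)
        return {"data": data, "fresh": True, "age_s": 0.0}

    def _fallback(self, now) -> dict:
        """Serve cached data with a freshness flag and its age in seconds."""
        if self.snapshot is None:
            return {"data": None, "fresh": False, "age_s": None}
        data, fetched_at = self.snapshot
        return {"data": data, "fresh": False, "age_s": now - fetched_at}
```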

What Lifecycle Rules Keep Content Reliable At Scale?

If content has no owner, it decays fast. Assign explicit owners with SLAs for review, set automated retirement rules for unused or superseded documents, and require a lightweight human verification step before resurrecting archived content. Use periodic, prioritized content audits driven by usage analytics so high-impact pages get attention first, and surface unresolved contradictions as assignable tasks rather than passive warnings.
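
The lifecycle rules might be expressed as a single function that returns the next step for each document. The field names, the 90-day review SLA, and the 180-day retirement window are assumptions for illustration.

```python
from datetime import date, timedelta


def lifecycle_action(doc: dict, today: date,
                     review_sla_days: int = 90,
                     retire_after_unused_days: int = 180) -> str:
    """Return the next lifecycle step for a document dict with hypothetical
    keys 'owner', 'last_reviewed', 'last_viewed', and 'superseded_by'."""
    if doc.get("superseded_by"):
        return "retire"          # a newer document replaces this one
    if not doc.get("owner"):
        return "assign_owner"    # unowned content decays fastest
    if today - doc["last_viewed"] > timedelta(days=retire_after_unused_days):
        return "retire"          # unused for too long
    if today - doc["last_reviewed"] > timedelta(days=review_sla_days):
        return "review"          # owner missed the review SLA
    return "ok"
```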

Which Governance Controls Actually Prevent Costly Mistakes?

The truth is, governance is the mechanism that converts speed into safety. Implement policy-as-code that enforces role-based access controls, automatically redacts PII at ingestion, and maintains immutable audit logs for every automated write. Pair those controls with a staged release model: read-only preview, sandboxed action with rollback, and finally, fully automated updates once confidence and human review metrics pass defined thresholds.
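
For the audit log, a hash chain is one common way to make tampering detectable: each entry includes the hash of the previous one, so altering any past entry breaks verification. This sketch is tamper-evident rather than truly immutable (that also requires write-once storage), and it omits the timestamps and signatures a production log would carry; the class and field names are illustrative.

```python
import hashlib
import json


class AuditLog:
    """Append-only, hash-chained log of automated and human actions."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(body: dict) -> str:
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def append(self, actor: str, action: str, target: str) -> None:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {"actor": actor, "action": action, "target": target, "prev": prev_hash}
        self.entries.append({**body, "hash": self._digest(body)})

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry fails the check."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: entry[k] for k in ("actor", "action", "target", "prev")}
            if entry["prev"] != prev_hash or entry["hash"] != self._digest(body):
                return False
            prev_hash = entry["hash"]
        return True
```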

Most teams coordinate knowledge by emailing experts and hoping someone replies, because it works for small loads and needs no new approvals. As ticket volume and dependencies grow, response latency balloons, single points of expertise burn out, and decisions stall in private threads. Platforms like enterprise AI agents change the pattern by routing context-rich tasks, preserving audit trails, and orchestrating approvals, so answers become completed work with fewer handoffs.

How Do You Reduce Human Rework And Build Real Trust?

Teams reject outputs that need heavy editing; they want high-fidelity responses that can be used with minimal post-processing. Solve for actionability: present short, copy-ready snippets with an explicit list of suggested actions, always make suggested edits reversible, and expose an easy feedback button that pushes corrections back into the training pipeline. According to Zendesk (2025), AI knowledge bases can increase agent productivity by 40%, but that productivity only materializes when answers are both accurate and immediately applicable. Combine that with cost accountability, and the business case becomes clear, as Zendesk (2025) notes: companies with AI knowledge bases see a 30% reduction in support costs.

Which Monitoring Metrics Tell You You’re Winning?

Track time-to-action, percent of responses that required human correction, connector freshness, and the rate at which flagged contradictions become assigned work. Use small A/B tests to validate changes, and require measurable improvement in human review load before expanding automation to riskier workflows. That sounds solved, until you try to deploy this reliably across legacy systems and mixed data types. What actually makes real-world rollouts succeed or fail?

How to Implement AI For Knowledge Management

Implementing AI for knowledge management means running three connected efforts at once: clean, versioned data; reproducible model workflows with human review gates; and operational metrics that value time saved and safe actions. Start with prioritized pilots that prove accuracy and automation thresholds, then expand those repeatable patterns across teams.

What Should You Audit First?

Begin with a focused intake audit that maps content owners, connector health, and query failure modes over a two-week window. Measure query types, average time-to-first-action, and the percentage of searches that return no authoritative source. Also tag the sources that contain 80 percent of the high-impact answers you need, because targeting the small set of documents that drive most decisions reduces scope and speeds wins. This prioritization matters now more than ever: according to Gartner (2023), "By 2025, 80% of enterprises will have adopted AI-driven knowledge management solutions." Adoption at that pace compresses the time you have for vendor and integration choices.
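
Finding the sources behind 80 percent of high-impact answers is a Pareto cut over the audit tallies. A sketch, assuming you have counted how many high-impact answers each source supplied during the two-week window; the function name and input shape are hypothetical.

```python
def sources_covering(answer_counts: dict, share: float = 0.8) -> list:
    """Return the smallest set of sources, taken in descending order of count,
    that together account for `share` of all high-impact answers.

    answer_counts: source name -> number of high-impact answers it supplied.
    """
    total = sum(answer_counts.values())
    picked, covered = [], 0
    for source, n in sorted(answer_counts.items(), key=lambda kv: kv[1], reverse=True):
        if covered >= share * total:
            break
        picked.append(source)
        covered += n
    return picked
```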

How Do You Structure Data So Models Behave Reliably?

Use a three-layer schema: canonical entities, curated content slices, and a provenance layer that captures source, timestamp, and extraction confidence. Run an initial automated cleaning pass to remove duplicates and normalize formats, then conduct a short human review in which owners verify critical mappings within 7 to 14 days. For high-change sources, schedule nightly incremental indexing; for stable knowledge, schedule weekly refreshes. Treat tagging as living metadata, not a one-time project: require owners to approve automated tags for a 30- to 60-day training window so the model learns your language and you build trust.
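
The three layers can be modeled as plain records. This is one possible shape, not a prescribed schema: the class and field names are hypothetical, and the helper shows the kind of integrity check (slices pointing at entities that do not exist) that the human review pass should catch early.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class CanonicalEntity:       # layer 1: the business object itself
    entity_id: str
    name: str


@dataclass(frozen=True)
class Provenance:            # layer 3: where a slice came from
    source: str
    captured_at: datetime
    extraction_confidence: float


@dataclass(frozen=True)
class ContentSlice:          # layer 2: curated text tied to entities
    slice_id: str
    text: str
    entity_ids: tuple[str, ...]
    provenance: Provenance
    tags_approved: bool = False  # set to True once an owner approves the tags


def dangling_entities(slices: list, entities: list) -> list:
    """Return entity IDs referenced by slices that have no canonical entity."""
    known = {e.entity_id for e in entities}
    return sorted({eid for s in slices for eid in s.entity_ids} - known)
```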

Which Experiments Prove The System Works?

Design A/B tests that compare time-to-resolution and edit overhead, not just search relevance. Track the proportion of answers that go straight into action without edits, and the human correction rate per 1,000 queries. Use a canary rollout with a sandbox agent that can suggest actions but must be approved before any write, then move to a staged approval pipeline once automatic accuracy exceeds your threshold. That blunt focus on downstream work, rather than perfect summaries, is how teams show real ROI, and it matters because, according to McKinsey & Company (2023), "Companies using AI for knowledge management report a 30% reduction in time spent searching for information," which ties directly to time reclaimed for value work.
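
The metrics above are straightforward to compute per test arm once each answer is logged with a few outcome flags. The record keys here are assumptions about what your logging captures.

```python
from statistics import median


def experiment_metrics(results: list) -> dict:
    """Summarize one arm of an A/B test.

    Each result is a dict with hypothetical keys:
      'edited'             True if a human changed the answer before using it
      'actioned'           True if the answer led to an action
      'resolution_minutes' time from question to resolution
    """
    n = len(results)
    corrections = sum(r["edited"] for r in results)
    straight_through = sum(r["actioned"] and not r["edited"] for r in results)
    return {
        "corrections_per_1000": 1000 * corrections / n,
        "straight_through_rate": straight_through / n,
        "median_resolution_minutes": median(r["resolution_minutes"] for r in results),
    }
```

Run it on each arm separately and compare the two dictionaries; a lower correction rate with an equal or better resolution time is the signal worth scaling.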

How Do You Govern Models And Maintain Trust?

Create explicit policy-as-code for acceptable action types, PII handling, and approval gates. Instrument every response with a provenance snippet and an extraction confidence, and log the complete verification chain for audits. Set an error budget that limits the monthly number of high-risk automated writes; if the budget is hit, revert to read-only mode and require a manual remediation sprint. Run weekly synthetic query tests across edge cases and keep a connector health scoreboard with automatic circuit-breakers for stale feeds.
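
An error budget on high-risk writes can be sketched as a counter keyed by month that flips the system to read-only once the limit is reached. The class, the month-string keys, and the reset method are illustrative assumptions; in practice the reset would follow a completed remediation sprint.

```python
class WriteErrorBudget:
    """Caps high-risk automated writes per month. Once the budget is spent,
    the system drops to read-only until it is reset after remediation."""

    def __init__(self, monthly_limit: int):
        self.monthly_limit = monthly_limit
        self.used = {}          # "YYYY-MM" -> count of high-risk writes
        self.read_only = False

    def request_write(self, month: str, high_risk: bool) -> bool:
        """Return True if the write may proceed."""
        if self.read_only:
            return False        # no automated writes of any kind
        if not high_risk:
            return True
        if self.used.get(month, 0) >= self.monthly_limit:
            self.read_only = True  # budget exhausted: revert to read-only mode
            return False
        self.used[month] = self.used.get(month, 0) + 1
        return True

    def reset_after_remediation(self) -> None:
        self.read_only = False
```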

What Operational Practices Prevent The Usual Failures?

Treat connectors like live sensors with heartbeat checks and synthetic queries, and keep a shadow index for rollback when schema drift occurs. Separate retrieval and action agents so retrieval can be permissive, while action always requires higher confidence and approval. Keep one staging environment that mirrors production metadata and runs nightly replays, so you can spot silent drift before it reaches users. Log every human override so corrections feed directly into reranker training batches on a fixed cadence.
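A minimal sketch of connector health checks with a circuit breaker, assuming each connector reports a heartbeat timestamp and the result of its latest synthetic query. While the circuit is open, reads fall back to a shadow index. The separate retrieval and action thresholds illustrate the permissive-retrieval, strict-action split; the `Connector` class and both threshold values are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical thresholds: retrieval is permissive, actions need high confidence.
RETRIEVAL_MIN_CONFIDENCE = 0.3
ACTION_MIN_CONFIDENCE = 0.9


@dataclass
class Connector:
    """Connector state as tracked on a health scoreboard (assumed shape)."""
    name: str
    last_heartbeat: datetime
    max_staleness: timedelta
    synthetic_query_passed: bool = True
    circuit_open: bool = False  # open = stop serving this feed's live index


def evaluate(conn: Connector, now: datetime) -> str:
    """Open the breaker on a stale heartbeat or a failed synthetic query;
    close it again once both checks pass."""
    stale = now - conn.last_heartbeat > conn.max_staleness
    conn.circuit_open = stale or not conn.synthetic_query_passed
    return "open" if conn.circuit_open else "closed"


def choose_index(conn: Connector, live_index: str, shadow_index: str) -> str:
    """Serve the last-known-good shadow index while the circuit is open."""
    return shadow_index if conn.circuit_open else live_index


def can_retrieve(confidence: float) -> bool:
    return confidence >= RETRIEVAL_MIN_CONFIDENCE


def can_act(confidence: float, approved: bool) -> bool:
    """Actions need both high confidence and an explicit approval."""
    return confidence >= ACTION_MIN_CONFIDENCE and approved
```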

Why Teams Get Frustrated, And How To Avoid That

Most teams rely on generic assistants because they are quick to set up. That approach feels familiar, but as query types and stakeholders multiply, hallucinations and brittle, context-poor responses erode trust. The hidden cost is not the initial setup time; it is the ongoing rework and lost confidence when a recommendation is wrong. Solutions like enterprise AI agents change that pattern by capturing project state and connecting to many apps, preserving context while enabling reversible actions and audit trails, which lets organizations scale automation without sacrificing safety.

How Do You Keep People Engaged With The System?

Make the first wins obvious and low risk. Route high-confidence suggestions directly into existing workflows as pre-filled drafts that require one-click approval, and publish the time saved per task in team dashboards. Staff a rotating steward for four to six weeks to triage low-confidence items and convert them into training data. That human loop converts skepticism into momentum; when teams see fewer edits and faster execution, adoption follows.
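The routing step can be sketched as a simple confidence split: suggestions above a cutoff become drafts awaiting one-click approval, and everything else goes to the rotating steward, whose corrections become labeled training examples. The 0.85 cutoff, the `est_minutes_saved` field, and the `Router` class are assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Suggestion:
    task_id: str
    text: str
    confidence: float
    est_minutes_saved: float  # hypothetical estimate attached by the agent


@dataclass
class Router:
    high_confidence: float = 0.85  # hypothetical cutoff
    drafts: list[Suggestion] = field(default_factory=list)         # await one-click approval
    steward_queue: list[Suggestion] = field(default_factory=list)  # triaged by the steward
    minutes_saved: float = 0.0     # running total for the team dashboard

    def route(self, s: Suggestion) -> str:
        if s.confidence >= self.high_confidence:
            self.drafts.append(s)
            return "draft"
        self.steward_queue.append(s)
        return "steward"

    def approve(self, task_id: str) -> Suggestion:
        """One-click approval: remove the draft and credit its time saved."""
        for i, s in enumerate(self.drafts):
            if s.task_id == task_id:
                self.minutes_saved += s.est_minutes_saved
                return self.drafts.pop(i)
        raise KeyError(task_id)

    def steward_resolve(self, task_id: str, corrected_text: str) -> dict[str, str]:
        """Turn a triaged low-confidence item into a labeled training example."""
        for i, s in enumerate(self.steward_queue):
            if s.task_id == task_id:
                self.steward_queue.pop(i)
                return {"input": s.text, "label": corrected_text}
        raise KeyError(task_id)
```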

A Short Operational Checklist You Can Run In Week One

Map the 10 highest-value documents and their owners.

Run 48-hour connector health and synthetic query tests.

Launch a sandbox agent tied to a single workflow with an approval gate.

Define an error budget and a rollback plan.

Instrument dashboards for time-to-action and human correction rate.

Think of the system as a rail network, not a single station: signals must be reliable, tracks aligned, and switches tested before you let a complete train pass. That next step has a hidden test most teams skip, and it changes whether your pilot becomes permanent or fizzles out.

Book a Free 30-Minute Deep Work Demo

Most teams accept manual back-and-forth as the default because it feels safer, yet that habit quietly eats clarity and momentum. Let’s run a focused pilot that tests an AI-for-knowledge-management approach on a platform like Coworker, using enterprise AI agents to validate governance and safe automation on a single workflow. This dress rehearsal proves the choreography before you commit to broader change.


Do more with Coworker.

Coworker

Make work matter.

Coworker is a trademark of Village Platforms, Inc

SOC 2 Type 2

GDPR Compliant

CASA Tier 2 Verified

Links

Company

2261 Market St, 4903 San Francisco, CA 94114

Alternatives
